VR has been a hot topic for the last couple of years. Many of us have already tried it, and those who haven’t have almost certainly read at least a few articles about it.

The Rift will be out this month, the HTC Vive the following month, and the Gear VR is already available, so there is a good chance that a client will ask you to build one of those experiences as soon as next quarter. This is not an overview of the devices, and it is definitely not a blog post for consumers. Instead, we’re going to take a look at how things work: what are the limitations of VR, and how far can you push this new technology?

How the hardware works

The current generation of VR devices mainly consists of head-mounted displays, or HMDs. They are devices worn on the head, with a display that covers your field of view and completely blocks out everything else.

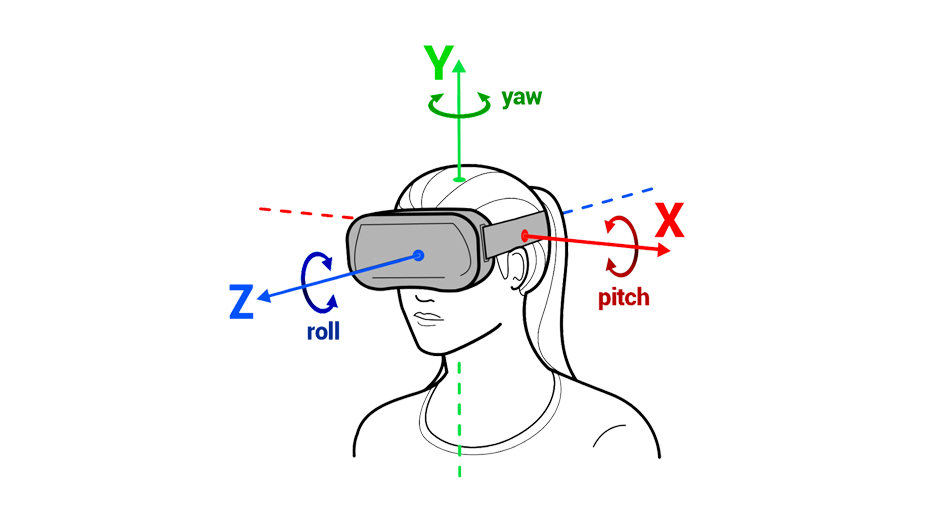

A gyroscope, an accelerometer and a magnetometer are combined to track how you rotate your head on all three axes. These rotations are called pitch, yaw and roll. This gives you the freedom to look around, but if you move your shoulders, for example by leaning forward, you’ll notice that the movement still doesn’t feel quite right, because these sensors alone track rotation and not position. For that reason, Oculus, HTC and Sony have started to build position trackers so your movements can be traced more accurately.
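To make pitch and yaw a bit more concrete, here is a minimal Python sketch that turns the two angles into the direction the head is facing. The axis convention (+y up, -z straight ahead, positive yaw turning left) and the function name `look_direction` are assumptions made for this example; every engine and SDK picks its own convention.

```python
import math

def look_direction(yaw_deg, pitch_deg):
    """Return the unit vector (x, y, z) the head is facing.

    Assumed convention: +y is up, -z is straight ahead, positive yaw
    turns the head to the left and positive pitch tilts it up.
    """
    yaw = math.radians(yaw_deg)
    pitch = math.radians(pitch_deg)
    x = -math.sin(yaw) * math.cos(pitch)
    y = math.sin(pitch)
    z = -math.cos(yaw) * math.cos(pitch)
    return (x, y, z)

# Looking straight ahead, then turning 90 degrees to the left.
ahead = look_direction(0, 0)
left = look_direction(90, 0)
```

Roll is left out on purpose: tilting your head toward a shoulder rotates the image around the look direction, but it doesn’t change where you are looking.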

HTC’s idea is the most ambitious of them: it lets you walk around inside a room, so your movement is not limited to your head and shoulders. It’s Kinect for 2016.

Combining all these sensors gives you what is called 6-DoF, or six degrees of freedom: three for position (forward/backward, left/right and up/down) and three for rotation (pitch, yaw and roll).
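As a rough illustration, a six-degrees-of-freedom pose can be thought of as three position values plus three rotation values. The `HeadPose` class and the `rotation_only` helper below are hypothetical names for this sketch, not part of any headset SDK, and the sketch assumes -z is forward.

```python
from dataclasses import dataclass

@dataclass
class HeadPose:
    # Position in meters: three degrees of freedom.
    x: float = 0.0      # left / right
    y: float = 0.0      # up / down
    z: float = 0.0      # forward / backward (assuming -z is forward)
    # Rotation in degrees: three more degrees of freedom.
    pitch: float = 0.0
    yaw: float = 0.0
    roll: float = 0.0

def rotation_only(pose):
    """What a headset without positional tracking would report."""
    return HeadPose(pitch=pose.pitch, yaw=pose.yaw, roll=pose.roll)

# Leaning 20 cm forward while looking slightly down.
leaning = HeadPose(z=-0.2, pitch=-10.0)
seen_by_3dof = rotation_only(leaning)
```

The rotation-only version keeps the pitch but loses the lean, which is exactly the "not quite right" feeling you get without position tracking.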

Most companies building VR headsets have also created new controllers. These controllers incorporate gyroscopes and haptic feedback to give you more control over your hands than a regular joystick would.

Besides that, some companies are relying on the classic controller designs we have been using for decades, and that’s totally fine: sometimes you just want to sit on the couch and press some buttons.

What the software does

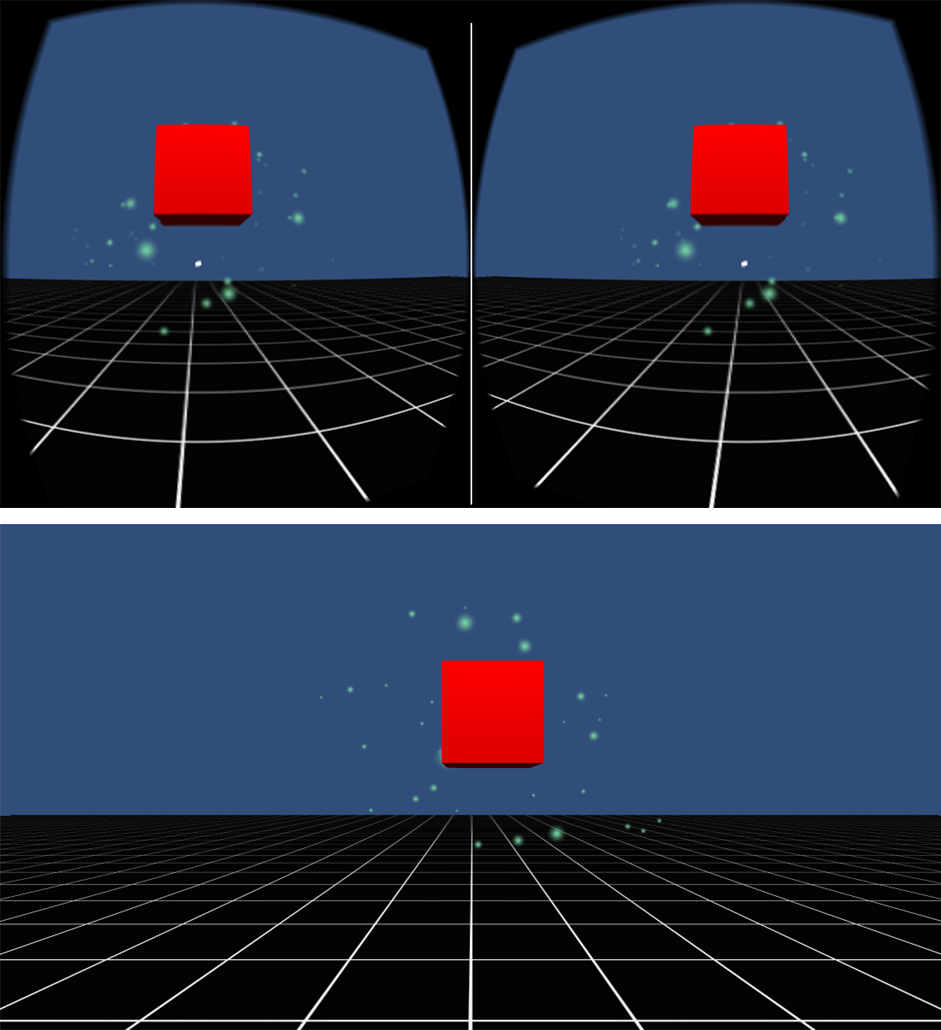

In real life your left eye sees an image slightly different from the one that your right eye sees, and this gives you a sense of depth. The same is done in VR. Your scene is rendered twice, from two camera positions that reflect the distance between your eyes.
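A minimal sketch of the idea, assuming you already know the head position and a unit vector pointing to the viewer’s right. The function name `eye_positions` and the default eye distance of 0.064 m are illustrative assumptions; game engines and headset SDKs normally handle this for you.

```python
def eye_positions(head, right, ipd=0.064):
    """Return (left_eye, right_eye) camera positions.

    head  -- head position as (x, y, z), in meters
    right -- unit vector pointing to the viewer's right
    ipd   -- distance between the eyes in meters (0.064 is an assumed,
             illustrative value; real headsets measure or configure it)
    """
    half = ipd / 2.0
    left_eye = tuple(h - r * half for h, r in zip(head, right))
    right_eye = tuple(h + r * half for h, r in zip(head, right))
    return left_eye, right_eye

# A viewer standing 1.7 m tall at the origin, with +x to their right.
left_eye, right_eye = eye_positions(head=(0.0, 1.7, 0.0), right=(1.0, 0.0, 0.0))
```

Each of the two positions then gets its own render of the scene, one per eye.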

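These images are viewed through lenses, and since each pixel is made of separate red, green and blue subpixels and the lenses bend each color by a slightly different amount, colors and shapes get distorted. The first step to correct this distortion is to minimize the colored fringe around objects by compensating for chromatic aberration (think of it as an image filter). After that, it’s necessary to compensate for the shape of the lenses by applying barrel distortion, which cancels out the pincushion distortion the lenses introduce.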
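One common way to express this kind of correction is a radial polynomial: for every pixel of the final image, you look up the rendered image at a radius that grows faster the further you are from the lens center. The sketch below assumes that model. The function names and all coefficient values are illustrative assumptions, not taken from any real headset, whose SDK supplies its own calibrated values.

```python
def source_coords(u, v, k1=0.22, k2=0.24, channel_scale=1.0):
    """Where to sample the rendered image for output pixel (u, v).

    (u, v) is measured from the lens center, roughly in the -1..1 range.
    k1, k2 and channel_scale are illustrative values only.
    """
    r2 = u * u + v * v
    factor = (1.0 + k1 * r2 + k2 * r2 * r2) * channel_scale
    return (u * factor, v * factor)

def rgb_source_coords(u, v):
    """Sample each color channel at a slightly different radius to
    counteract the colored fringes introduced by the lens."""
    return {
        "red": source_coords(u, v, channel_scale=0.996),
        "green": source_coords(u, v, channel_scale=1.0),
        "blue": source_coords(u, v, channel_scale=1.014),
    }

center = source_coords(0.0, 0.0)      # the center is left untouched
edge = source_coords(0.5, 0.0)        # sampled further out than 0.5
channels = rgb_source_coords(0.5, 0.0)
```

Because pixels away from the center sample from further out, the picture ends up squeezed toward the middle, which is the barrel shape you see in VR screenshots.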

This is what an image looks like before and after being processed for VR:

Making you feel immersed

You predict everything: how objects move, where they will be after a few seconds, where your hand will be because you just decided to raise it... Changes in your field of view are expected every time you turn your head. If they don’t happen, or happen at a different rate, you will be disconnected from the experience or get disoriented. The technical name for this is simulator sickness, but you can call it motion sickness too.

This also means that 30fps is not enough anymore. Because the display is attached to your head and responds to your movements, the software has to be far more responsive than the average game. The minimum frame rate is now 60fps, and the recommended one, according to Sony, is 90fps.

This becomes a bigger problem because you have to render the scene twice, once for each eye, so you end up sacrificing graphics quality.
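The arithmetic behind this is simple enough to sketch. The per-eye figure below is a deliberately naive assumption (it splits the frame time evenly between two full renders), since real engines share some work between the eyes.

```python
def frame_budget_ms(fps):
    """Time available to produce one frame, in milliseconds."""
    return 1000.0 / fps

def naive_per_eye_budget_ms(fps, eyes=2):
    """Frame time split evenly between the eye renders (a simplification)."""
    return frame_budget_ms(fps) / eyes

for fps in (30, 60, 90):
    print(f"{fps} fps: {frame_budget_ms(fps):.1f} ms per frame, "
          f"{naive_per_eye_budget_ms(fps):.1f} ms per eye")
```

At 90fps you have roughly 11 ms for the whole frame, compared with about 33 ms at 30fps, and that time has to cover both eyes.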

Be aware that you won’t be able to have the same graphics quality you are used to, at least for now. This is an important limitation you have to consider while you conceive your VR experiences. It’s time to revisit the 1999 bag of special effects.

Interaction models

We’re still discovering what works and what should be avoided, but there are things we already know:

- The user’s gaze may be used as a cursor

- Show a pointer when the user can interact with an object, but hide it the rest of the time to avoid visual clutter

- Prefer camera movements with constant speed, applying easing when you need to stop

- Try to keep the horizon line stable

- Don’t move the camera when the player is not expecting it

- UIs tend to work better when you attach them to the world. If you want a button, just make one.

- HUDs probably won’t be the best solution for your UI, so rethink it
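As a sketch of the first two points, gaze-as-cursor can be reduced to a ray test: cast a ray from the head along the look direction and check whether it hits an interactive object. The example below assumes each object is approximated by a bounding sphere; `gaze_hits_sphere` is a hypothetical helper, and a game engine would normally give you its own raycasting instead.

```python
def gaze_hits_sphere(origin, direction, center, radius):
    """True if a ray from `origin` along the unit vector `direction`
    hits the sphere at `center` with the given `radius`."""
    to_center = [c - o for c, o in zip(center, origin)]
    dist_sq = sum(a * a for a in to_center)
    if dist_sq <= radius * radius:
        return True  # the viewer is inside the sphere
    # Distance along the ray to the point closest to the sphere center.
    along = sum(a * b for a, b in zip(to_center, direction))
    if along < 0:
        return False  # the object is behind the viewer
    # Squared distance from the sphere center to that closest point.
    return dist_sq - along * along <= radius * radius

head = (0.0, 0.0, 0.0)
looking = (0.0, 0.0, -1.0)  # straight ahead, assuming -z is forward

# Show the pointer only while the gaze rests on something interactive.
show_pointer = gaze_hits_sphere(head, looking, center=(0.0, 0.0, -5.0), radius=1.0)
off_to_the_side = gaze_hits_sphere(head, looking, center=(3.0, 0.0, -5.0), radius=1.0)
```

Here `show_pointer` is true because the object sits right in front of the viewer, while the object three meters to the side is missed and the pointer would stay hidden.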

Looking at objects and tapping a touchpad/button has been a common interaction pattern, but it does not replace more sophisticated controllers that enable you to:

- Move and see your arms

- Grab things with your hands

- Move your hands independently

- Walk in a room

Oculus has published great guidelines, and I recommend that you read them.

Videos in VR

360 videos are great, but they are not virtual reality. They are only content that can be consumed with VR headsets, and even though they can be stereoscopic, they are still flat. Things really shine when you’re less passive and can grab objects and interact with them. Think of ways to really use the space instead of just being there. Combining video surfaces with a 3D environment works better than just playing something, but you’ll need a developer.

How to start building things?

Currently the fastest way to start is to use a game engine like Unity or Unreal. Native development toolkits exist, but even headset makers recommend using game engines. All the complex parts have already been abstracted away, so you can focus on the creative stuff.

WebVR is not ready yet. Hardware matters too: even the most expensive MacBook Pro can’t run native Vive games. If you want something now, go native and use a PC.

Try it

If you don’t have a Rift or a Vive, get a Cardboard or a Gear VR and play at least a dozen games. Get lost in the virtual world before thinking you know how it works. Reading articles and watching videos helps a lot, but it won’t give you the experience you need.