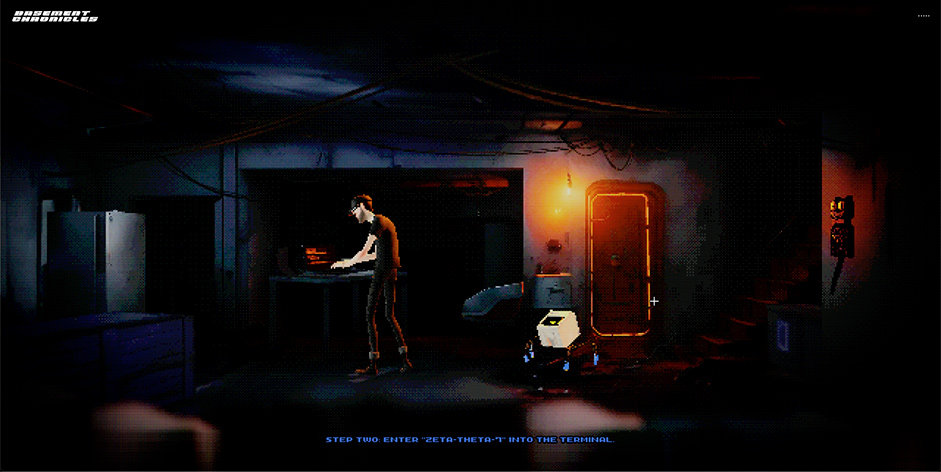

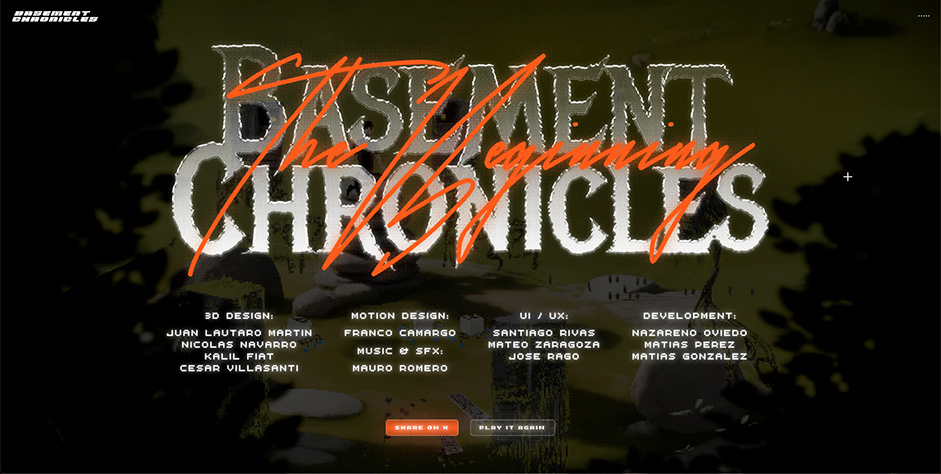

Here at basement, we are fueled by nostalgia. A while ago, we started exploring new tools to make an immersive experience, and before we knew it, we were hooked on creating a retro, pixelated point-and-click game right in the browser.

Through our laboratory, we often venture into creative explorations to unearth valuable gems. On this project, we uncovered new approaches and unlocked hidden talents within our team. We're thrilled with the results and can't recommend the process enough. But we're not stopping there. We embarked on this journey not just for ourselves but to share our findings. So, get ready to delve into our discoveries.

Envisioning Chronicles

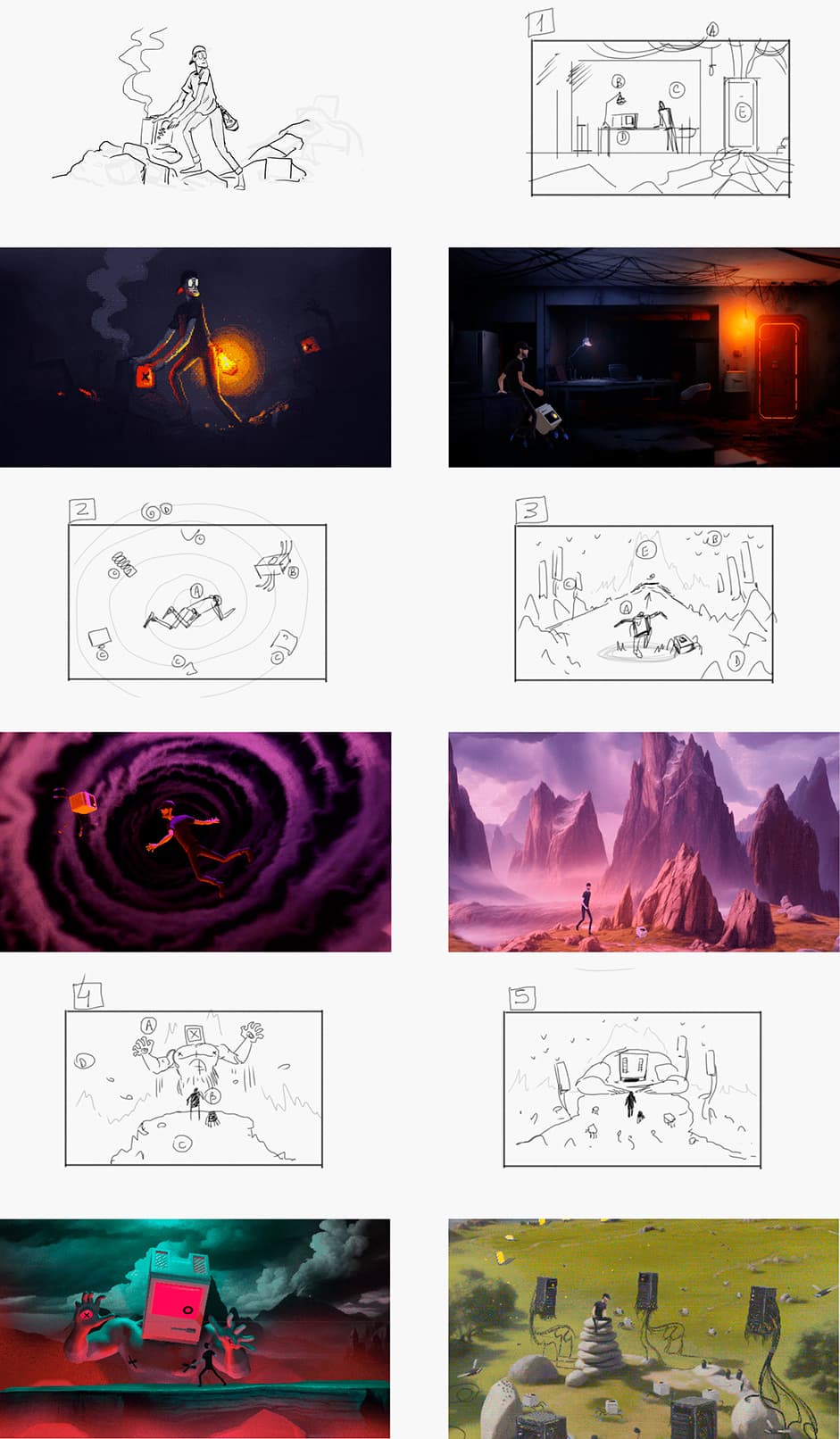

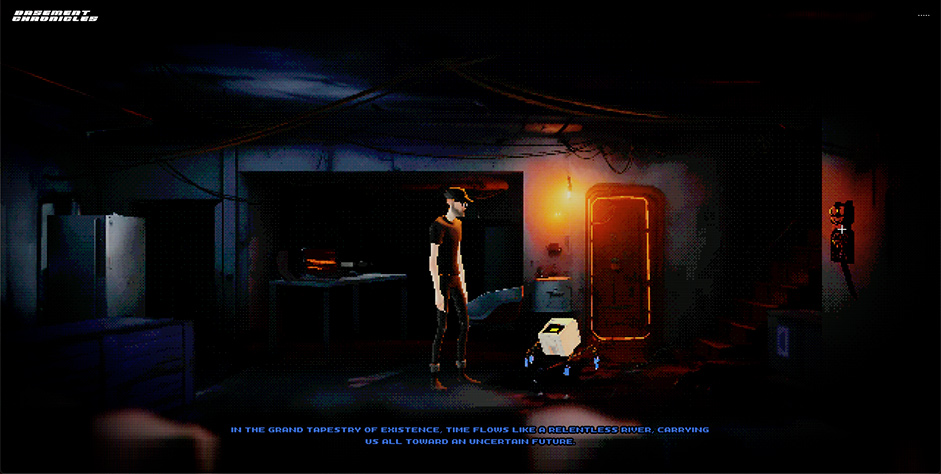

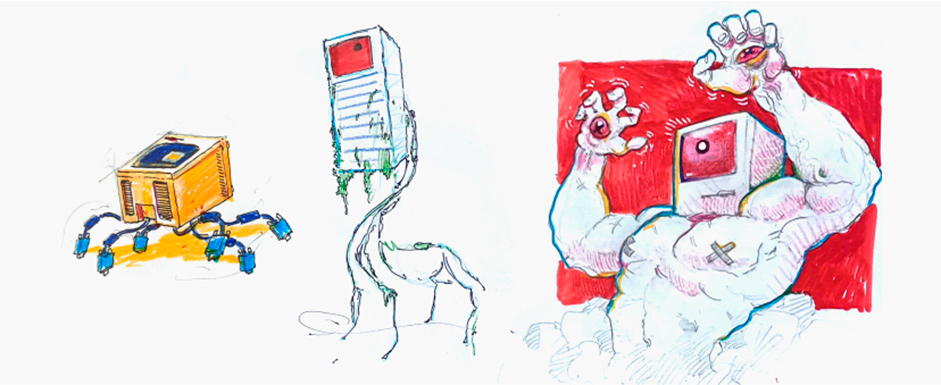

Let's dive into the conceptualization phase to capture the essence of our journey. This tale is about reverse engineering everything - from the initial pencil and paper sketches to the final render in the browser. We went through numerous phases, each influencing both the next and the previous one. Every decision we made had a domino effect, so we trod carefully.

We started by brainstorming a script, putting together a mood board, and crafting a main storyboard, all based strictly on our character dialogues. It's worth mentioning that this story features characters from our PC game, Looper, so you can think of this project as an exciting extension of that original narrative and lore.

“Every decision we made had a domino effect, so we trod carefully.”

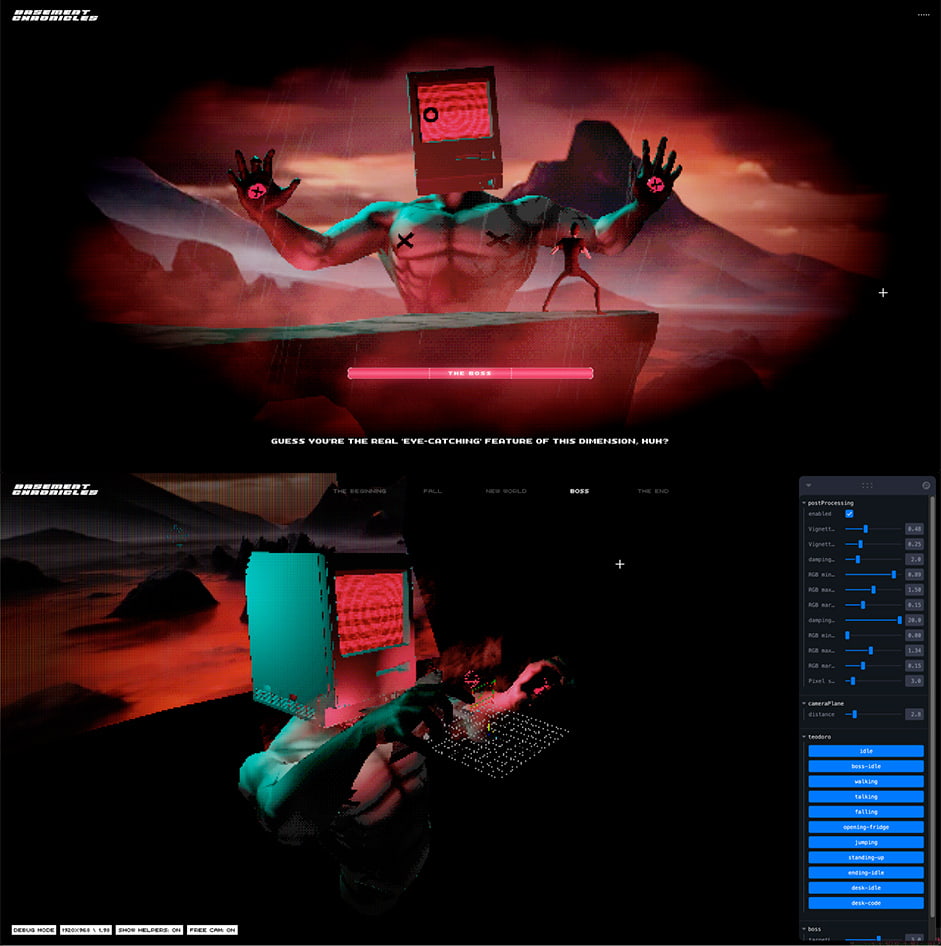

When we moved into production, we first transformed our hand-painted concept scenes into 3D models to set some boundaries. Next, we used AI to refine the overall look and feel. Using Leonardo, we curated a selection from multiple output iterations. Finally, we returned to Photoshop to fine-tune the assets and prepare them for the next phase.

Fake It Till You Make It

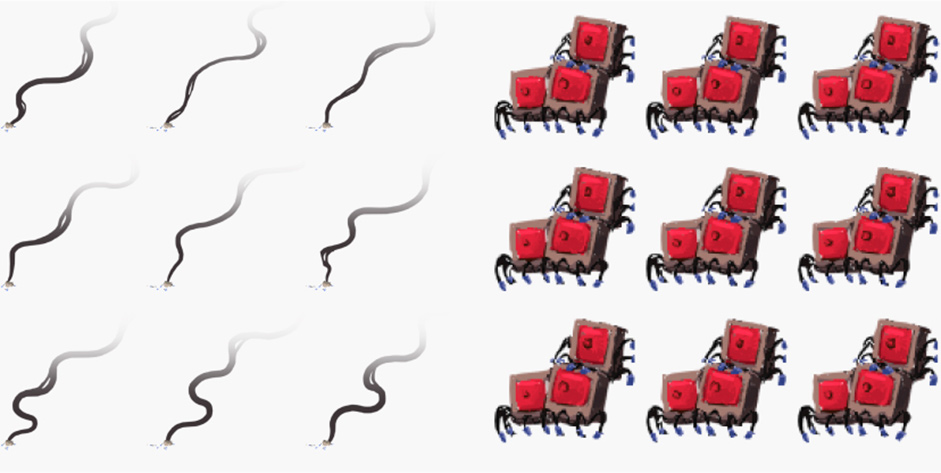

Okay, this is where we hit some serious challenges: we had to juggle a wide range of assets, from rigged 3D models and 2D sprite sheet animations to static PNGs, shaders, bezier curves, and more. The question was, how could we seamlessly merge all of these elements in a single scene?

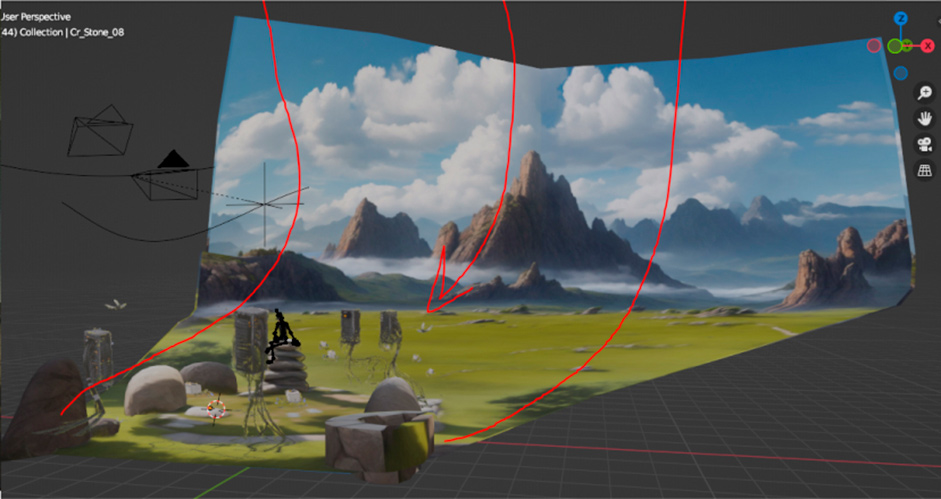

We were tasked with filling the canvas with a polished, final view. After testing several approaches and struggling to handle overlapping elements, we decided on a challenging approach: create a low-poly perspective projection for all scenes and place all elements within it.

We couldn't just present our scene as it came. Therefore, we projected it onto a plane to give it a normalized look for the viewer. This kind of 3D scenography involves clever tricks to create a convincing view from the camera's perspective.

If you take a closer look at the scene from different points, you'll notice some intentional proportional aberrations, similar to what you might see in games. For instance, from the camera viewpoint, a character might appear to be interacting with the laptop, even though he’s not directly in front of it. It's similar to the optical illusion tourists experience taking photos at the Leaning Tower of Pisa.

By doing this, we could position some elements closer to the camera and keep them in the same structure as the overall environment. We set different layers for the landscape and the foreground, putting the characters in between. The result? A seamless parallax experience that reacts to mouse movement and camera position, perfectly blending all elements of the experience.
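To make the layering idea concrete, here's a minimal sketch of depth-based parallax. It is a 2D simplification written for illustration: the `Layer` shape, the `depth` convention (0 is nearest the camera, 1 is farthest), and the `maxShift` value are all assumptions, not the project's code. In a 3D scene, a similar effect can come from moving the camera itself.

```typescript
// Hypothetical layer description: depth 0 = nearest the camera, 1 = farthest away.
interface Layer {
  name: string;
  depth: number;
}

interface Offset {
  x: number;
  y: number;
}

// pointerX / pointerY are normalized to [-1, 1], with (0, 0) at the screen center.
// maxShift is the largest shift in pixels, applied to a layer at depth 0 (assumed value).
function parallaxOffset(
  layer: Layer,
  pointerX: number,
  pointerY: number,
  maxShift: number = 40,
): Offset {
  // Layers closer to the camera shift more than distant ones.
  const strength = (1 - layer.depth) * maxShift;
  // Shift against the pointer direction; flipping the sign is an equally valid choice.
  return { x: -pointerX * strength, y: -pointerY * strength };
}

const layers: Layer[] = [
  { name: "landscape", depth: 0.9 },
  { name: "characters", depth: 0.5 },
  { name: "foreground", depth: 0.1 },
];

for (const layer of layers) {
  const { x, y } = parallaxOffset(layer, 0.5, -0.25);
  console.log(`${layer.name}: ${x.toFixed(1)}px, ${y.toFixed(1)}px`);
}
```

With the characters sitting at a middle depth, they move less than the foreground and more than the landscape, which is what sells the sense of space.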

The Devs Enter The Game

The development team had many things to tackle. We needed to implement post-processing effects for the final render and manage the camera for perspective projection, and, oh boy, we had to build a system to debug this complex stream of visual assets, right?

Okay, we kicked things off with a critical decision: creating a filter to fuse our array of layers together on screen. Since we knew we'd be using post-processing, we had to nail this down early, as it would determine the resolution, size, and color depth of every other asset.

“Since we knew we'd be using post-processing, we had to nail this down early as it would impact all other assets”

We chose pixelated dithering with an indexed color palette reduced to 64 values. This meant getting to grips with some math and learning to implement a custom dithering effect. We then crafted a shader where each rendered pixel covers roughly 3x3 screen pixels. This approach achieved our desired nostalgic aesthetic and helped us set a file size limit for optimal performance.
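For a feel of the math involved, here's a minimal CPU-side sketch of ordered dithering. It is not the shader from the project: the 4x4 Bayer matrix and the uniform palette of 4 levels per channel (4 x 4 x 4 = 64 colors) are assumptions picked to match the numbers above, and the function names are made up for illustration.

```typescript
// Standard 4x4 Bayer threshold matrix (values 0..15).
const BAYER_4X4: number[][] = [
  [0, 8, 2, 10],
  [12, 4, 14, 6],
  [3, 11, 1, 9],
  [15, 7, 13, 5],
];

const LEVELS = 4; // assumed: 4 levels per channel -> 4 * 4 * 4 = 64 colors
const BLOCK = 3; // screen pixels per "fat" pixel, matching the roughly 3x3 size

type RGB = [number, number, number];

// Quantize one 0..255 channel value to LEVELS steps, nudged by the dither threshold.
function ditherChannel(value: number, threshold: number): number {
  const step = 255 / (LEVELS - 1);
  // threshold lies in (-0.5, 0.5) and decides whether the value rounds up or down.
  const level = Math.round(value / step + threshold);
  const clamped = Math.min(LEVELS - 1, Math.max(0, level));
  return Math.round(clamped * step);
}

// Dither a color for the screen pixel at (x, y).
function ditherPixel(color: RGB, x: number, y: number): RGB {
  // Every screen pixel inside the same 3x3 block maps to the same matrix cell,
  // so a whole block gets the same treatment.
  const cellX = Math.floor(x / BLOCK) % 4;
  const cellY = Math.floor(y / BLOCK) % 4;
  const threshold = (BAYER_4X4[cellY][cellX] + 0.5) / 16 - 0.5;
  return [
    ditherChannel(color[0], threshold),
    ditherChannel(color[1], threshold),
    ditherChannel(color[2], threshold),
  ];
}

// A flat mid-gray turns into a pattern of two palette grays across neighboring blocks.
console.log(ditherPixel([128, 128, 128], 0, 0), ditherPixel([128, 128, 128], 0, 9));
```

In a real pixelation pass, the input color would also be sampled once per block, so each fat pixel ends up as a single flat color.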

And guess what? This process reshaped the overall output again, which meant we had to start building some browser tools for testing. Let's dive in!

Orchestrating On The Browser

Alright, at this point we realized we were essentially building 3D software in the browser. Let's talk about the debug mode.

We kicked off with a dedicated branch for post-processing and scene arrangements, so we weren't shooting in the dark. We set up a dashboard of easily accessible helpers under a UI for the team, which saved us a lot of time. Take, for example, a toggle for activating an orbit controls instance. Saving its state to local storage, so that it survived a refresh, spared us from manually creating it every time we needed to debug.
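A persisted toggle like that can be sketched in a few lines. This is an illustrative version, not the project's helper: the `PersistedToggle` class, the `KeyValueStore` interface, and the `debug:orbit-controls` key are all hypothetical names. The in-memory store only exists so the sketch runs outside a browser; in the browser you would pass `window.localStorage`, which has the same two methods.

```typescript
// Minimal subset of the Web Storage API used by the toggle.
interface KeyValueStore {
  getItem(key: string): string | null;
  setItem(key: string, value: string): void;
}

// In-memory stand-in for window.localStorage, handy for tests and non-browser runs.
class MemoryStore implements KeyValueStore {
  private data = new Map<string, string>();

  getItem(key: string): string | null {
    const value = this.data.get(key);
    return value === undefined ? null : value;
  }

  setItem(key: string, value: string): void {
    this.data.set(key, value);
  }
}

// A boolean flag whose state lives in the store, so it survives a page refresh.
class PersistedToggle {
  private store: KeyValueStore;
  private key: string;
  private fallback: boolean;

  constructor(store: KeyValueStore, key: string, fallback: boolean = false) {
    this.store = store;
    this.key = key;
    this.fallback = fallback;
  }

  // Read the current state, falling back to the default when nothing is stored yet.
  get value(): boolean {
    const raw = this.store.getItem(this.key);
    return raw === null ? this.fallback : raw === "true";
  }

  // Flip the state, write it back, and return the new value.
  toggle(): boolean {
    const next = !this.value;
    this.store.setItem(this.key, String(next));
    return next;
  }
}

const store = new MemoryStore();
const orbitControls = new PersistedToggle(store, "debug:orbit-controls");
orbitControls.toggle();
console.log("orbit controls enabled:", orbitControls.value);
```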

“We're essentially building 3D software right in the browser”

Another handy trick was leveraging Yomotsu's camera controls from the R3F Drei library to build our camera system. This saved our devs from setting views manually and solved many complex geometry calculations.

Seems cool, huh? Wanna test this debugging experience? Just head over to /?debug=true. You can even play the entire game in this mode. It’d be like choosing the 'hard mode.'

More Dev Challenges

We faced some intriguing challenges beyond just debugging. For instance, syncing the GSAP and R3F timers was tough. We found out that the GSAP clock stops when tweens aren’t running for a while. But what if you need that timer for something else? Well, we had to adjust GSAP's autoSleep configuration and synchronize its ticker with our WebGL ticker. This allowed us to run some background shaders without any freezes.

Another big challenge was programming the point-and-click behavior. We created a hook named 'useStory' to keep events and states in sync, encapsulating our logic in a scalable way. Drawing inspiration from GSAP timelines, we built a system rooted in a promise chain. This approach strictly maintained the order of the story sequence. As certain promises, like a character's dialog, were fulfilled by user interaction, they would unlock subsequent elements in the game.
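The promise-chain idea can be sketched without any framework. This is not the `useStory` hook itself, whose API isn't shown here; `runStory` and `createInteractionStep` are hypothetical helpers that only illustrate how a step waiting on user input can block everything after it.

```typescript
// A story step resolves when it is finished, e.g. when the player dismisses a dialog.
type Step = () => Promise<void>;

// Run steps strictly in order by chaining each one onto the previous promise.
function runStory(steps: Step[]): Promise<void> {
  return steps.reduce<Promise<void>>(
    (chain, step) => chain.then(step),
    Promise.resolve(),
  );
}

// Build a step that stays pending until `complete` is called,
// for example from a click handler.
function createInteractionStep(onStart: () => void): { step: Step; complete: () => void } {
  let resolve: () => void = () => {};
  const done = new Promise<void>((r) => {
    resolve = r;
  });
  return {
    step: () => {
      onStart();
      return done;
    },
    complete: () => resolve(),
  };
}

// Example sequence: intro, then a dialog that waits for the player, then an unlock.
const events: string[] = [];
const dialog = createInteractionStep(() => events.push("dialog shown"));

const story = runStory([
  async () => {
    events.push("intro played");
  },
  dialog.step,
  async () => {
    events.push("next area unlocked");
  },
]);

// Nothing after the dialog runs until the player interacts.
story.then(() => console.log(events.join(" -> ")));
dialog.complete();
```

Because every step hangs off the previous promise, the order of the story can't be broken by a fast click or a slow animation.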

And lastly, we also had a blast revisiting some of our previous experiments from the basement laboratory. You can spot our bezier curve toolset in action to run Theodoro's walking animation path and again in the trajectory of the flying birds.
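For readers who haven't played with curves before, here's a minimal sketch of evaluating a point along a bezier path. It assumes a cubic curve with four control points, and the `Point` type, the function names, and the sample coordinates are illustrative; the toolset mentioned above may well work differently.

```typescript
interface Point {
  x: number;
  y: number;
}

// Evaluate a cubic bezier curve at t in [0, 1] using the Bernstein polynomial form.
function cubicBezier(p0: Point, p1: Point, p2: Point, p3: Point, t: number): Point {
  const u = 1 - t;
  const a = u * u * u; // weight of the start point
  const b = 3 * u * u * t; // weight of the first control point
  const c = 3 * u * t * t; // weight of the second control point
  const d = t * t * t; // weight of the end point
  return {
    x: a * p0.x + b * p1.x + c * p2.x + d * p3.x,
    y: a * p0.y + b * p1.y + c * p2.y + d * p3.y,
  };
}

// Sample the curve into evenly spaced t values, e.g. as waypoints for a walk cycle.
function samplePath(p0: Point, p1: Point, p2: Point, p3: Point, segments: number): Point[] {
  const points: Point[] = [];
  for (let i = 0; i <= segments; i++) {
    points.push(cubicBezier(p0, p1, p2, p3, i / segments));
  }
  return points;
}

// Made-up control points for a gentle arc.
const waypoints = samplePath(
  { x: 0, y: 0 },
  { x: 0, y: 1 },
  { x: 1, y: 1 },
  { x: 1, y: 0 },
  8,
);
console.log(waypoints.length, "waypoints along the curve");
```

Driving `t` from an animation timeline moves a character or a bird along the curve; note that even steps in `t` don't give perfectly even distances, so constant-speed motion needs an extra arc-length step.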

No 16-bit Music, No Retro Game!

We knew we couldn't deliver anything retro without a killer low-bit soundtrack. That's why we brought Mauro into a dedicated pod for music production and SFX. We aimed for an SNES vibe with the music, so creating directly in MIDI and working in 16-bit seemed like a dream. Though this approach kept the file size light, it posed a problem: playing MIDI in the browser required loading the sound library, which, in our case, weighed in at around 17 MB. To tackle this, we had to sample all the sounds to MP3 to make them accessible. Now, with our soundtrack downsized to a user-friendly 3 MB, why not head over to SoundCloud and give it a spin?

But our sound design journey didn't stop there. What about dialogues? We leveraged Eleven Labs voice technologies to iterate and polish Theodoro's voice. We generated a bunch of variants by adjusting pitch and speed until we hit the right tone. Much like the visual processing we did with AI, having numerous outputs to curate from really helped us find what resonated best with our human senses. As for Patas’ voice, we twisted the real script until we achieved a robotic sound wave that could resemble our beloved R2-D2.

A Pixelated Tribute

Now, what have we accomplished so far in a single website? These chronicles go beyond Theo and Patas. Here, you'll find AI trained on our own artwork, which was painted in Photoshop and then projected onto a perspective plane. You'll also spot classic 2D sprite sheet animation techniques coexisting with intricate 3D models and rigs.

Enjoy a seamless experience with clever loading states, cool retro music produced in-house, and a complete JavaScript game to defeat the final boss. And to top it all off, this magic is presented in a super cool pixelated output that steals our nostalgic hearts. We hope you can feel the vibes we had when creating this. We’re just eager for more tributes to those games that shaped our childhood.

Tech Stack

Creating a game for the browser comes with its fair share of interesting challenges and demands a diverse toolkit. We particularly enjoyed testing new software and cutting-edge tech like AI. Our creative flow primarily involved tools like Figma, Photoshop, and Blender, while we relied on Leonardo AI and Eleven Labs for AI generative processing. On the development front, we can't sing enough praises for our favorites: React Three Fiber with Drei and PostProcessing, Three.js, GSAP, Next.js, and Vercel.

Company Info

We are a small but mighty multi-disciplinary digital studio based in Mar Del Plata, ARG, and Los Angeles, US. We believe creativity is the central driving force for positive impact. We partner with leading cultural engineers to move the world forward through the convergence of creativity and technology.